VirusTotal vs Google Safe Browsing vs ScamAdviser vs Scamy.io: Which Tool is Best for Detecting Online Scams?

The internet has changed how we shop, work, and connect. But along with all the opportunities, it also exposes us to scams, phishing, and malware. Spotting a fake website isn’t always straightforward: some scams look completely legitimate, while even genuine small business sites can sometimes appear suspicious.

Security tools can automate much of what a security expert would do, but their approaches differ, and their effectiveness can vary significantly.

In this experiment, we tested the leading security tools alongside our proprietary algorithm to determine which delivers the highest effectiveness.

#1. The Tools at a Glance

Each solution takes a different approach to identifying dangerous sites:

- VirusTotal: Checks a domain against more than 90 antivirus engines and reputation services, then reports whether any of them flags it as dangerous. This makes it less of a one-to-one comparison and more like one against ninety.

- Google Safe Browsing: A blocklist service baked into Chrome, Firefox, and other browsers. It quietly blocks known malicious sites in the background.

- ScamAdviser: Uses a mix of technical checks (like WHOIS registration, server location, domain age) plus user reviews to assign a “trust score.” Popular with consumers who want to quickly check if an online shop seems legit.

- Scamy: Our new proprietary solution that applies both static and AI analysis to determine whether a business is legitimate. It was specifically developed to spot scams and fraud-related domains, even if they don’t distribute malware.

Each tool reflects a different philosophy: consensus-driven, blocklist-based, trust-scoring, and AI-driven detection.

That’s what makes the comparison compelling: they’re not simply different versions of the same approach.
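To make the "one against ninety" point concrete, here is a minimal sketch of an any-engine-flags rule. It is not VirusTotal's actual logic or API: the verdict labels, the `flagged_by_any` function, and the `min_engines` parameter are assumptions made for illustration.

```python
from typing import Mapping

# Hypothetical verdict labels; real engines report richer categories.
FLAGGING_VERDICTS = {"malicious", "phishing", "suspicious"}


def flagged_by_any(verdicts: Mapping[str, str], min_engines: int = 1) -> bool:
    """Return True if at least `min_engines` engines flag the domain."""
    hits = sum(1 for v in verdicts.values() if v.lower() in FLAGGING_VERDICTS)
    return hits >= min_engines


# Made-up engine names and verdicts for a single domain.
example = {"EngineA": "clean", "EngineB": "phishing", "EngineC": "clean"}
print(flagged_by_any(example))                 # one flag is enough
print(flagged_by_any(example, min_engines=2))  # stricter consensus
```

Raising `min_engines` trades recall for fewer false alarms, which is the same trade-off the rest of this comparison measures.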

#2. Methodology

Testing a website detection tool isn’t straightforward. The internet is messy. Legitimate websites sometimes look shady: say, a small family business with poor design. And sophisticated scams often look clean and professional, complete with SSL certificates and polished branding.

To capture both sides of the story, we tried to build a balanced dataset composed of both legitimate and malicious domains:

- Legitimate domains were sampled using the Tranco ranking system, which aggregates popularity across multiple feeds to resist manipulation. Domains were grouped into four tiers based on popularity:

  - Tier A: 99 domains randomly sampled from the top 10,000 most visited websites.

  - Tier B: 98 domains randomly sampled from ranks 10,001–500,000.

  - Tier C: 97 domains randomly sampled from ranks 500,001–1,000,000.

  - Tier D: 30 hand-selected domains not present in the Tranco ranking but verified as legitimate smaller-scale services.

- Malicious domains were curated from blackbook and our own threat intelligence feeds. Two subcategories were created:

  - Well-Known Malicious: 38 established phishing sites confirmed by major security vendors, listed in public threat feeds.

  - Niche Malicious: 20 lesser-known websites targeting smaller markets. These may not always distribute malware but often aim to scam or deceive users.
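The random sampling for Tiers A to C could look roughly like the sketch below. It assumes the Tranco list has already been loaded into a Python list ordered by rank; the `sample_tier` helper, the fixed seed, and the synthetic `siteN.example` names are illustrative and not the script we used.

```python
import random


def sample_tier(ranked_domains, first_rank, last_rank, k, seed=0):
    """Randomly sample k domains whose 1-based rank is in [first_rank, last_rank]."""
    tier = ranked_domains[first_rank - 1:last_rank]
    return random.Random(seed).sample(tier, k)


# Stand-in for a Tranco list: index 0 holds the rank-1 domain.
ranked = [f"site{rank}.example" for rank in range(1, 1_000_001)]

tiers = {
    "A": sample_tier(ranked, 1, 10_000, 99),
    "B": sample_tier(ranked, 10_001, 500_000, 98),
    "C": sample_tier(ranked, 500_001, 1_000_000, 97),
}
print({name: len(domains) for name, domains in tiers.items()})
```

Seeding the generator keeps the sample reproducible, so the same domains can be re-tested later.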

All automatically selected domains were checked for valid DNS records and HTTP responses to ensure they were active and relevant.
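A liveness check of this kind can be sketched with the Python standard library alone. The function names, the port, the timeout, and the choice to count HTTP error statuses as "active" are assumptions, not a description of our exact pipeline.

```python
import http.client
import socket
import urllib.error
import urllib.request


def resolves(domain: str) -> bool:
    """True if the domain has at least one DNS address record."""
    try:
        return bool(socket.getaddrinfo(domain, 443))
    except (socket.gaierror, UnicodeError):
        return False


def responds(domain: str, timeout: float = 5.0) -> bool:
    """True if the server returns any HTTP response, including error statuses."""
    try:
        with urllib.request.urlopen(f"https://{domain}/", timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # a 4xx/5xx status still proves a live server
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False


def is_active(domain: str, resolve=resolves, fetch=responds) -> bool:
    """A domain counts as active only if it resolves and answers over HTTP."""
    return resolve(domain) and fetch(domain)
```

Calling `is_active("example.com")` performs real network requests. The `resolve` and `fetch` parameters exist so the logic can be exercised offline with stand-in functions.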

In total, the dataset comprised 324 legitimate domains and 58 malicious domains. The sampled domains are available on this repo.

The final distribution is: 84.8% legitimate and 15.2% malicious.

Although it does not mirror the exact proportions found on the internet, where malicious domains are far less common, this ratio was chosen to provide enough malicious examples for meaningful comparison while preserving a majority of legitimate domains to reflect typical user exposure.

#3. Evaluation

We ran every domain through each solution and saved the responses to a CSV file, which we later used to generate the final analysis.

We sorted the outcomes into four categories:

- True Positive (TP): A malicious site correctly flagged as dangerous.

- True Negative (TN): A legitimate site correctly passed as safe.

- False Positive (FP): A safe site incorrectly flagged as dangerous.

- False Negative (FN): A malicious site incorrectly passed as safe.
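The four categories above map directly onto a small function. The sketch below tallies them from a CSV; the column names (`domain`, `label`, `flagged`) and the sample rows are hypothetical and do not reflect the layout of our results file.

```python
import csv
import io
from collections import Counter


def outcome(is_malicious: bool, flagged: bool) -> str:
    """Map ground truth and a tool's verdict to TP, TN, FP, or FN."""
    if is_malicious:
        return "TP" if flagged else "FN"
    return "FP" if flagged else "TN"


# Hypothetical CSV layout; the column names are assumptions.
SAMPLE = """domain,label,flagged
shop.example,legitimate,0
bank-login.example,malicious,1
family-bakery.example,legitimate,1
prize-claim.example,malicious,0
"""

counts = Counter(
    outcome(row["label"] == "malicious", row["flagged"] == "1")
    for row in csv.DictReader(io.StringIO(SAMPLE))
)
print(dict(counts))
```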

#3.1 Evaluation Metrics

Each type of mistake has a real-world impact. False positives frustrate users and block legitimate businesses. False negatives expose people to scams and malware.

To better understand the overall performance of each solution, we considered several metrics:

- Accuracy = (TP + TN) / (TP + TN + FP + FN) - Overall correctness across all classifications

- Precision = TP / (TP + FP) - How many flagged domains were actually malicious (reduces false alarms)

- Recall (Sensitivity) = TP / (TP + FN) - How many malicious domains were successfully caught

- Specificity = TN / (TN + FP) - How many legitimate domains were correctly identified as safe

- F1-Score = 2 × (Precision × Recall) / (Precision + Recall) - Balanced measure of precision and recall
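These formulas translate into a few lines of Python. The sketch below applies them to VirusTotal's counts from the results section (39 malicious caught, 19 missed, 322 legitimate passed, 2 legitimate flagged). The `metrics` and `safe_div` helpers are illustrative names, and returning NaN for an empty denominator is one possible convention.

```python
def safe_div(numerator: float, denominator: float) -> float:
    """Return the ratio, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Compute the five metrics from the four confusion-matrix counts."""
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": safe_div(tn, tn + fp),
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# VirusTotal counts taken from the results section of this article.
for name, value in metrics(tp=39, tn=322, fp=2, fn=19).items():
    print(f"{name:<12}{value:.1%}")
```

The output should match the VirusTotal row of the results table: 94.5% accuracy, 95.1% precision, 67.2% recall, 99.4% specificity, and 78.8% F1.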

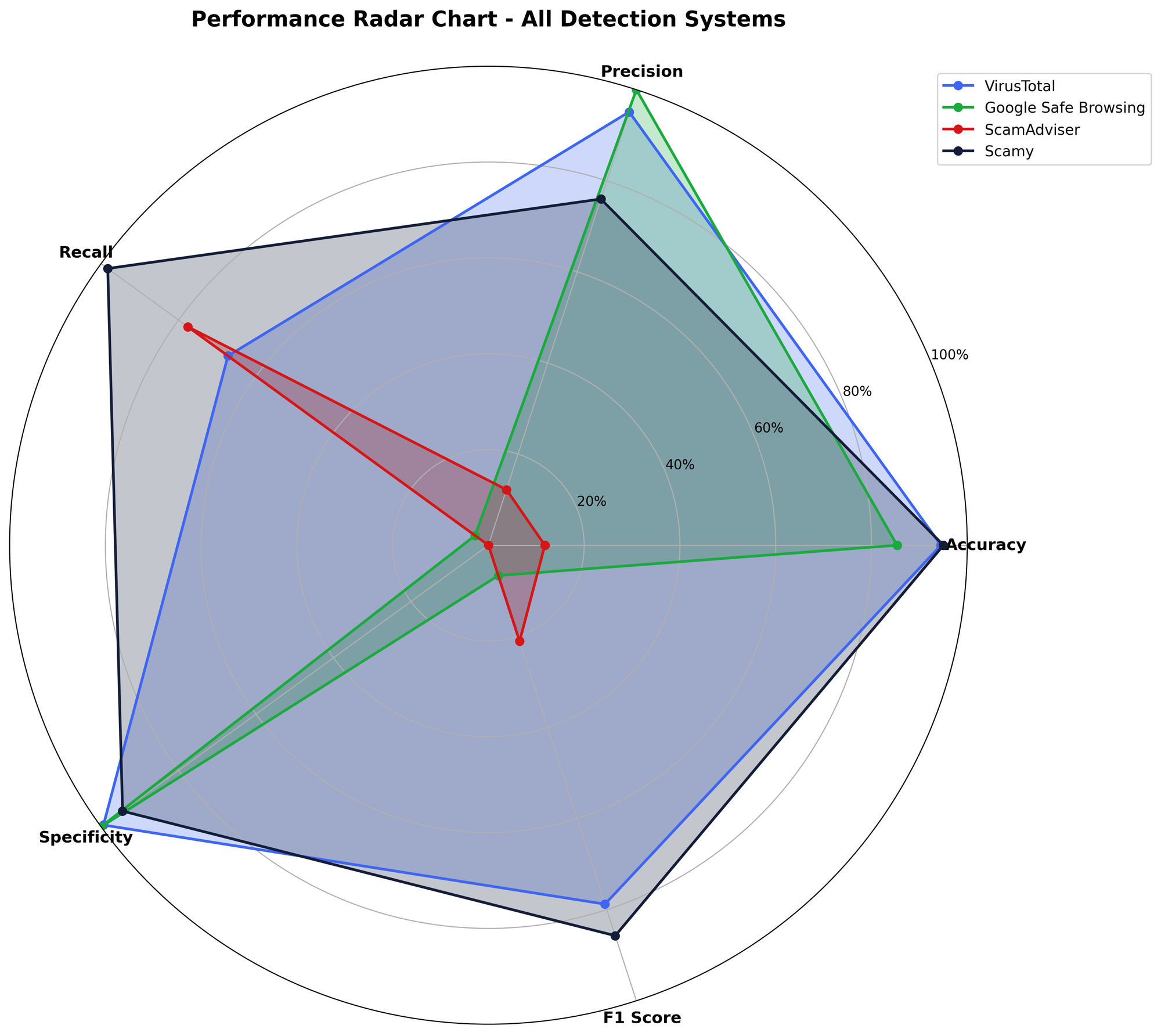

These metrics together give a clearer picture than accuracy alone. For example, Google Safe Browsing had high accuracy simply because most sites are safe, but its recall was dismal. Without recall, you’d never realize it was letting most threats through.
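A quick way to see why accuracy alone misleads on this dataset is to compare Google Safe Browsing with a do-nothing baseline that never flags anything. The baseline is a thought experiment, not a tool we tested; the counts come from the dataset sizes and the results reported in this article.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn)


# Dataset sizes from the methodology: 324 legitimate, 58 malicious.
# A hypothetical "detector" that never flags any domain.
never_flag = {"tp": 0, "tn": 324, "fp": 0, "fn": 58}
# Google Safe Browsing counts as reported in the results.
safe_browsing = {"tp": 2, "tn": 324, "fp": 0, "fn": 56}

for name, c in [("never flag", never_flag), ("Safe Browsing", safe_browsing)]:
    print(f"{name:<14} accuracy={accuracy(**c):.1%} recall={recall(c['tp'], c['fn']):.1%}")
```

The baseline reaches 84.8% accuracy with 0% recall, only half a point behind Safe Browsing's 85.3%. On a dataset that is mostly legitimate, accuracy says very little about detection.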

#4. Results

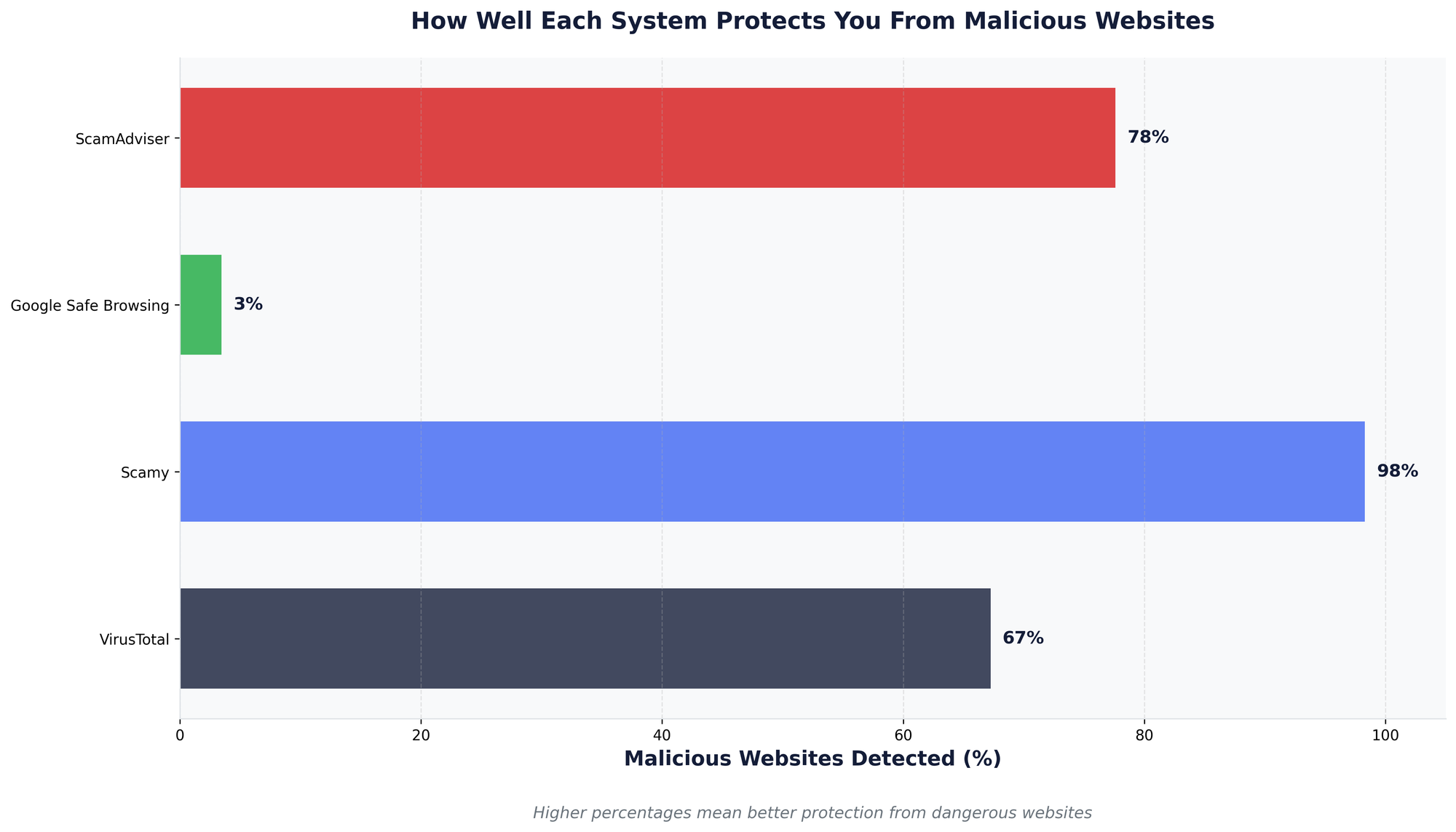

After running all 382 domains through the four systems, the differences were clear. Each tool showed its own strengths and weaknesses depending on whether the goal was avoiding false alarms or catching every possible scam.

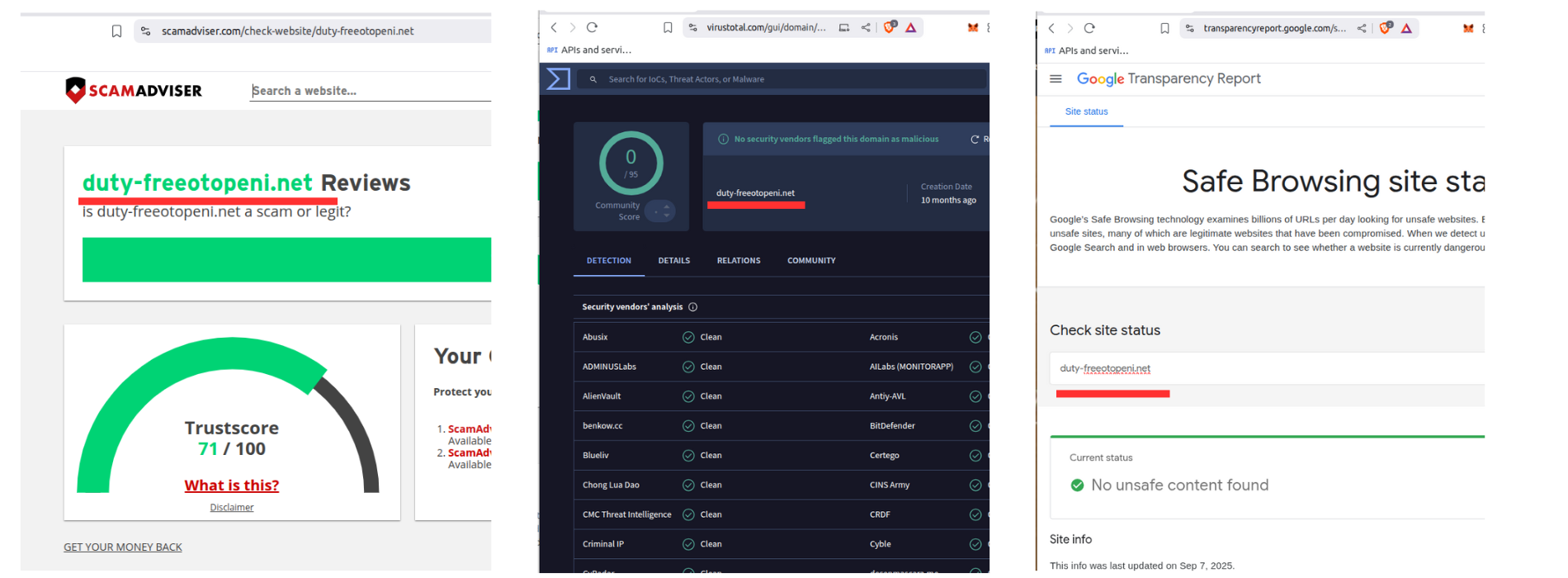

- VirusTotal: 322/324 legitimate passed (99.4%), 39/58 malicious caught (67.2%), missed 19 malicious

- Google Safe Browsing: 324/324 legitimate passed (100%), 2/58 malicious caught (3.4%), missed 56 malicious

- Scamy: 306/324 legitimate passed (94.4%), 57/58 malicious caught (98.3%), missed 1 malicious and wrongly flagged 18 legitimate

- ScamAdviser: 45/58 malicious caught (77.6%), missed 13 malicious (legitimate domains not tested)

These first results immediately show large differences between the solutions. Google Safe Browsing is heavily biased toward a very low false positive rate, while Scamy.io aims to maximize detection and push the false negative rate as low as possible. The other two take a more balanced approach, trying to get the best of both worlds.

The chart below compares the specificity of each tool.

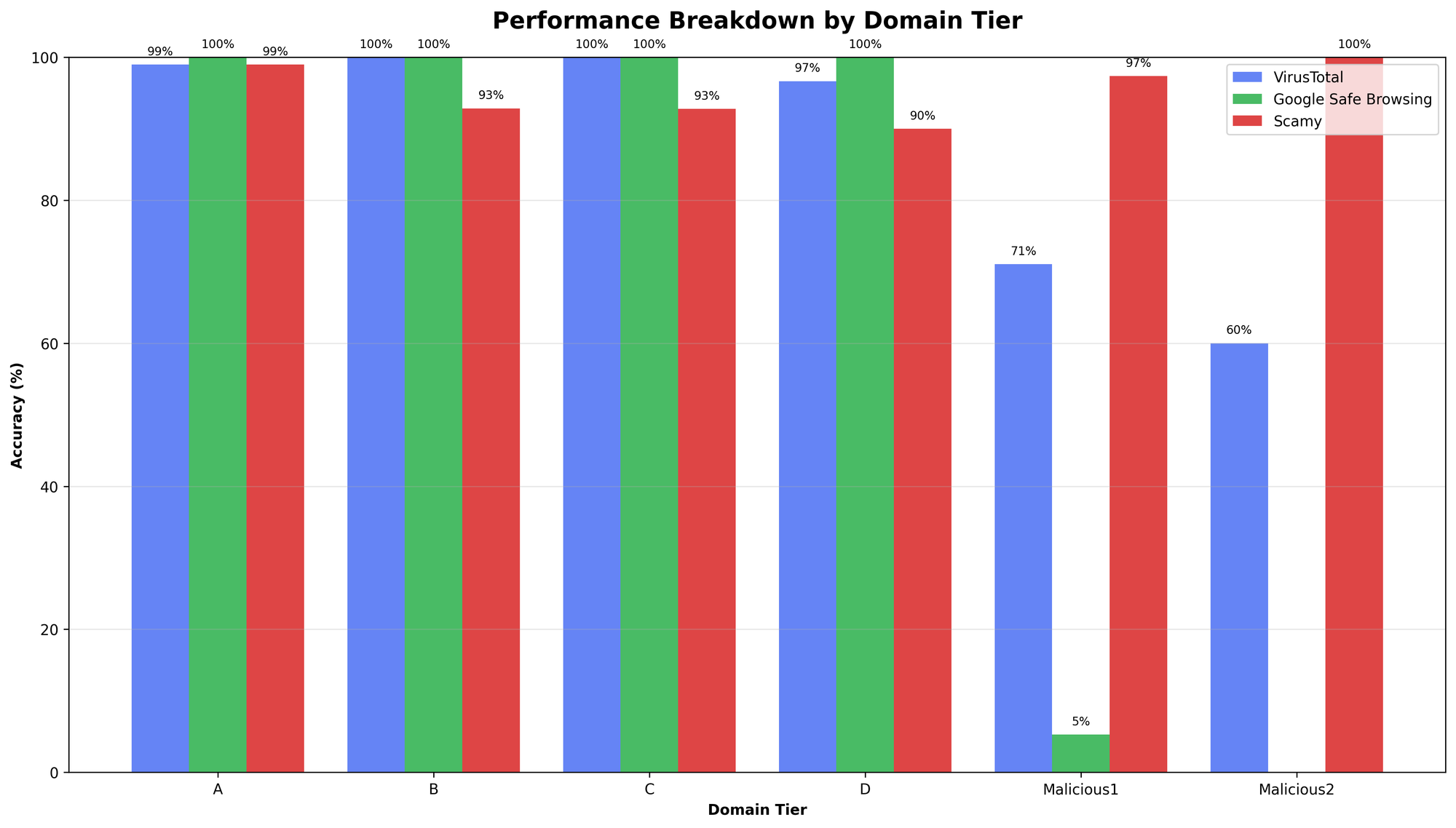

Breaking down performance by tier gives a clearer picture. VirusTotal and Google Safe Browsing showed a sharp drop in effectiveness against lesser-known fake websites, while Scamy's detection rate improved.

The major players seem to place less emphasis on websites that aren’t directly involved in malware distribution, even though global scam losses exceeded $1.03 trillion in 2024.

Example of a fake website selling luxury items that none of the vendors flagged.

Performance Metrics

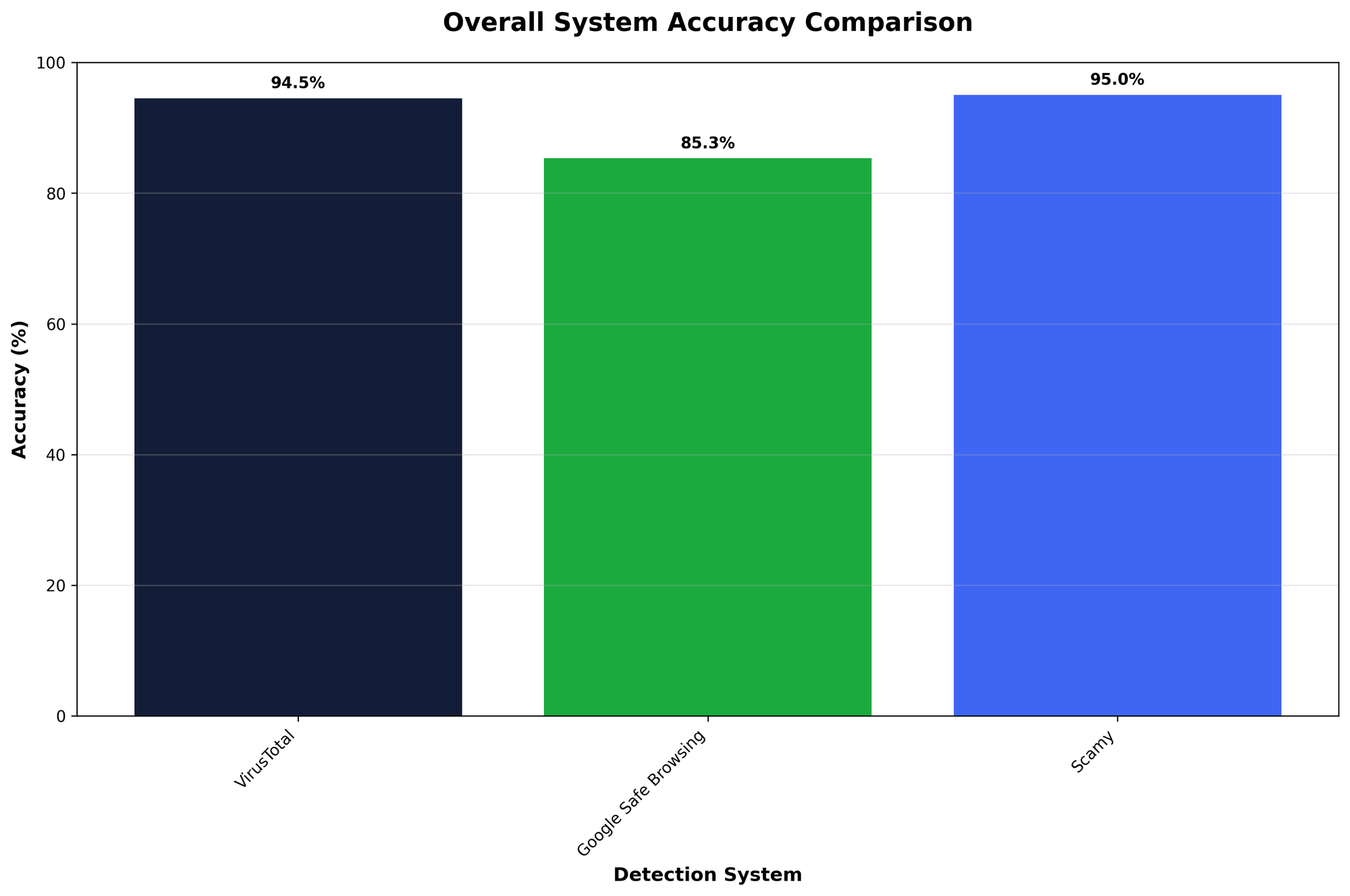

To understand these trade-offs more precisely, we compare accuracy, precision, recall, specificity, and F1-score:

| System | Accuracy | Precision | Recall | Specificity | F1 Score |
|---|---|---|---|---|---|
| VirusTotal | 94.5% | 95.1% | 67.2% | 99.4% | 78.8% |
| Google Safe Browsing | 85.3% | 100.0% | 3.4% | 100.0% | 6.7% |
| Scamy.io | 95.0% | 76.0% | 98.3% | 94.4% | 85.7% |
| ScamAdviser | N/A | N/A | 77.6% | N/A | N/A |

The Performance Radar below shows a direct comparison of how these metrics translate into real-world detection trade-offs.

#5. The Detection Reality Check

Each tool comes with its own strengths. Some prioritize a smooth user experience with fewer false alarms, while others aim for maximum protection, even if that means occasionally flagging safe sites by mistake.

VirusTotal: Balanced, but with Gaps

Great precision (95.1%), so when it flags something, you can trust it’s dangerous. But recall of 67.2% means it still missed about a third of malicious domains.

Google Safe Browsing: Conservative to a Fault

Perfect on legitimate sites—zero false positives. But recall of 3.4% means it failed to catch almost every malicious domain we tested.

Scamy.io: Aggressive but Effective

Excellent recall (98.3%), almost nothing slipped through. But it wrongly flagged 18 legitimate sites (false positive rate 5.6%), which could frustrate regular users.

ScamAdviser: Partial View

Caught 78% of malicious domains but missed 13. Since we couldn’t test it on legitimate sites, we don’t know if it also over-flags safe domains.

#6. Conclusions

No detection tool is flawless.

VirusTotal and Google Safe Browsing lean toward flagging only what they are sure about, while Scamy leans toward catching almost everything, even if it sometimes overreacts. ScamAdviser adds value, but its limitations make it harder to judge.

Each of the tools we tested reflects a different philosophy of protection, and none are without trade-offs.

- VirusTotal offers balanced performance, with strong precision and a wide range of detection engines behind it, but it still leaves gaps in recall.

- Google Safe Browsing delivers a seamless user experience with zero false positives, yet its very conservative approach means it misses most malicious sites.

- Scamy.io focuses on maximum coverage, catching nearly all threats, but does so at the cost of more false positives.

- ScamAdviser provides useful insight into scam-related sites and trust scores, though its limitations in API access and testing scope make it harder to fully evaluate.

Ultimately, the right tool depends on what the end user values most: minimizing false alarms, maximizing protection, or aiming for balance.

Our view is that when it comes to online threats, even a single missed malicious link can be enough to cause serious damage, so a few extra alerts may be a safer trade-off than letting dangerous sites slip through.